👋 Hi, it’s Rohit Malhotra, and welcome to the Partner Growth Newsletter, my twice-weekly newsletter with deep dives into the fastest-growing startups and S-1 briefs. Subscribe to join the readers who get Partner Growth delivered to their inbox every Wednesday and Friday morning.

Subscribe to the Life Self Mastery podcast, which shows you how to get funding and grow your business like a rocketship.

Previous guests include Guy Kawasaki, Brad Feld, James Clear, Nick Huber, and Shu Nyatta, among 350+ incredible guests.

S-1 Deep Dive

Cerebras in one minute

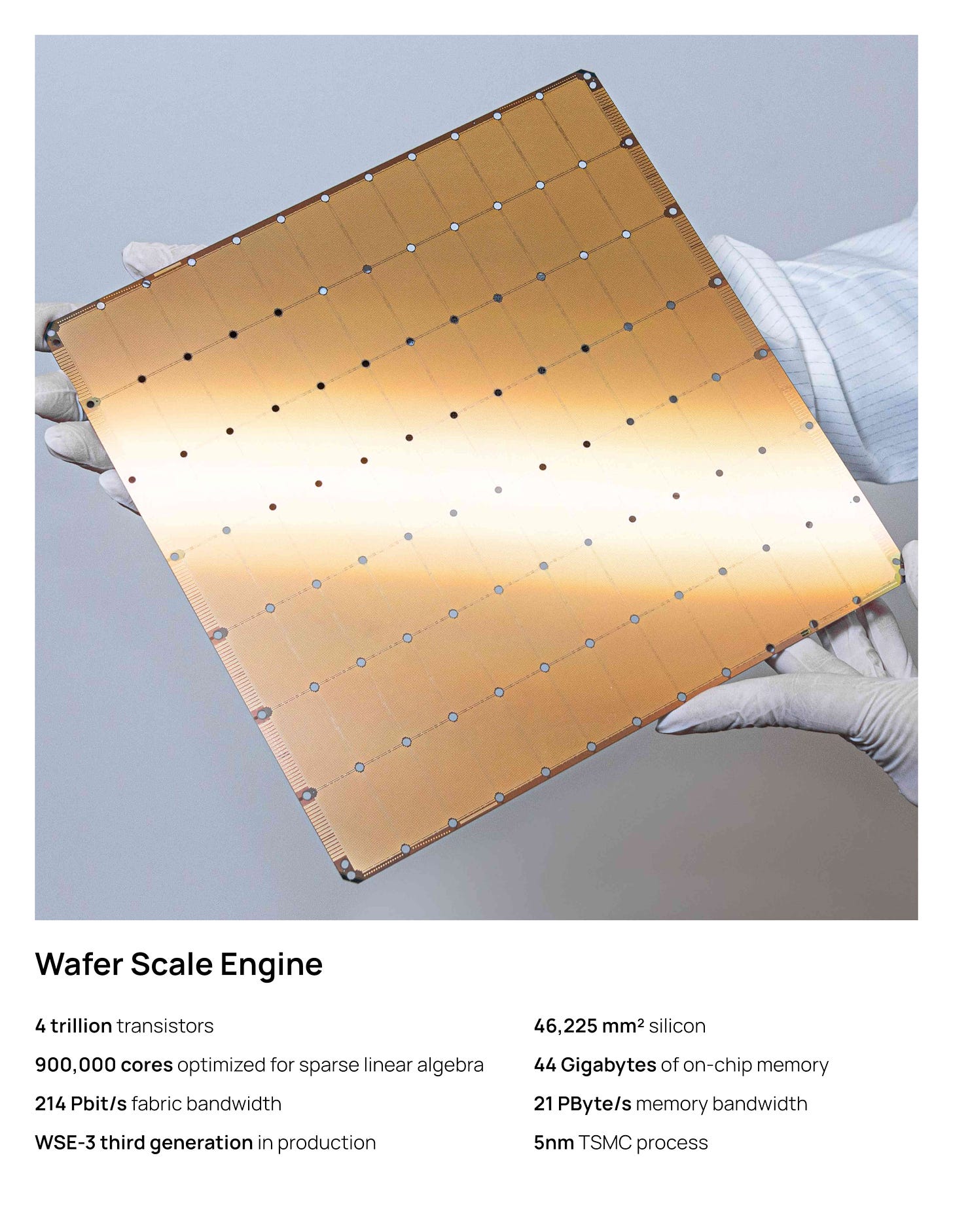

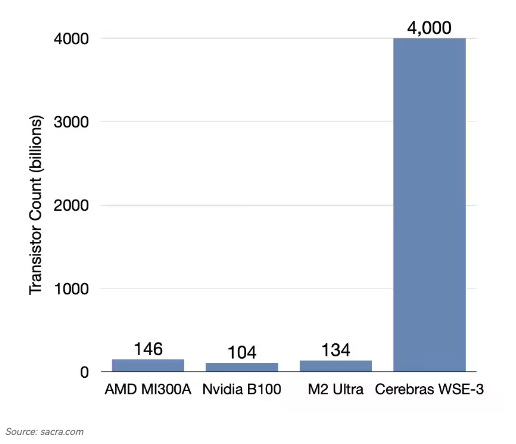

Cerebras is a cutting-edge chipmaker taking on the AI hardware market, aiming to compete with industry giant NVIDIA in both AI inference and training. At the heart of Cerebras’ offering is the Wafer-Scale Engine (WSE), the world’s largest chip, with about 57 times the surface area of the largest GPUs, which Cerebras says enables faster, more efficient AI processing.

Founded by a team of semiconductor veterans, Cerebras has embraced a fabless business model, relying on TSMC to manufacture its revolutionary processors. Its hardware and software are designed to simplify AI workloads, making AI more accessible to businesses.

While the company is tackling a fiercely competitive market, the sheer scale and innovation behind its technology could carve out a unique niche in the AI ecosystem.

To learn more about Cerebras' path to AI dominance, its fabless strategy, and how it stacks up against NVIDIA, keep reading.

Introduction

Cerebras Systems has made a significant impact on the semiconductor industry with its innovative approach to AI computing. Founded in 2016, the company has long pitched itself as something more than a hardware maker. In 2019 it introduced its standout product, the Wafer-Scale Engine (WSE): the largest chip ever built and one designed exclusively for AI workloads. Cerebras positioned it not as a tool but as a revolution, a major shift in how AI compute challenges are tackled. While some questioned the practicality of its bold claims, others saw it as visionary, a step toward reshaping AI itself.

Recent Developments

Cerebras co-founder Andrew D. Feldman made headlines with his ambitious vision: to revolutionize the AI landscape by democratizing access to high-performance computing. "We're not just building chips; we're changing the way organizations can leverage AI," Feldman proclaimed, capturing the ethos of the company’s mission.

Unlike many tech disruptors, however, Cerebras operates in a niche where success is defined not just by innovation but by the practical application of its technology. Behind the sleek veneer of its user-friendly AI platforms lies a more complex reality, one where scalability and efficiency must meet the rigorous demands of industry clients.

The company's growth has been propelled by early adoption among leading enterprises and sovereign AI initiatives. Demand for its WSE-based systems surged as businesses sought to harness AI for applications ranging from data analysis to real-time processing. This rapid uptake reflects a significant market shift, with Cerebras positioning itself as a challenger to giants like NVIDIA, which has dominated the GPU landscape for years.

Despite the excitement, Cerebras faces scrutiny over its path to profitability, given its heavy R&D spending. The company spent $140 million on R&D in 2023 alone, underscoring the delicate balance between innovation and financial sustainability. While the WSE’s capabilities set it apart, questions remain about the long-term viability of its business model amid increasing competition and regulatory pressures.

Investor Sentiment

Investor sentiment surrounding Cerebras is notably divided. On one side, the bulls champion the company as a revolutionary force poised to redefine AI computing. They highlight the expansive market opportunities fueled by a growing user base eager to adopt advanced AI technologies. For these optimists, Cerebras isn’t merely a semiconductor company; it’s the architect of a new age in AI, where high-performance computing is accessible to a broader audience.

Cerebras’ product lineup, including the WSE-3 and upcoming systems, along with partnerships with prominent tech firms, further bolsters investor confidence. Supporters point to the rapidly expanding AI compute market, which Bloomberg Intelligence expects to grow from $131 billion in 2024 to $453 billion by 2027, as fertile ground for Cerebras’ growth.

Conversely, skeptics raise red flags about the sustainability of Cerebras’ financial model. They cite the high operational costs associated with its ambitious R&D strategy and express concerns regarding the volatility of the AI market. Additionally, the significant competition from established players like NVIDIA looms large, raising questions about Cerebras’ ability to maintain its competitive edge.

As the AI landscape evolves, Cerebras embodies a dual narrative of innovation and caution. The company stands at a critical juncture—its mission to democratize AI computing is ambitious, but whether it can achieve that vision while navigating the complexities of profitability and market competition remains to be seen. In the ever-shifting realm of tech stocks, Cerebras Systems represents both a beacon of potential and a testament to the challenges that lie ahead.

History

Cerebras Systems, founded in 2016, set out to tackle the biggest challenge in AI compute: the inefficiencies and limitations of GPUs. GPUs, originally designed for graphics rendering, were struggling to keep up with the demands of AI training and inference workloads. Led by Andrew D. Feldman, Cerebras envisioned a groundbreaking alternative: Wafer-Scale Integration (WSI).

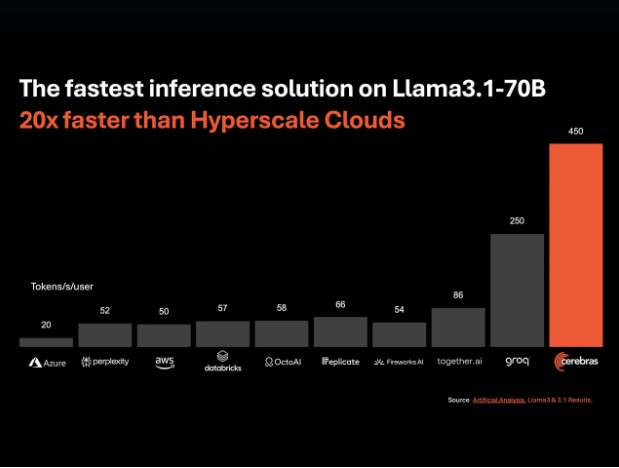

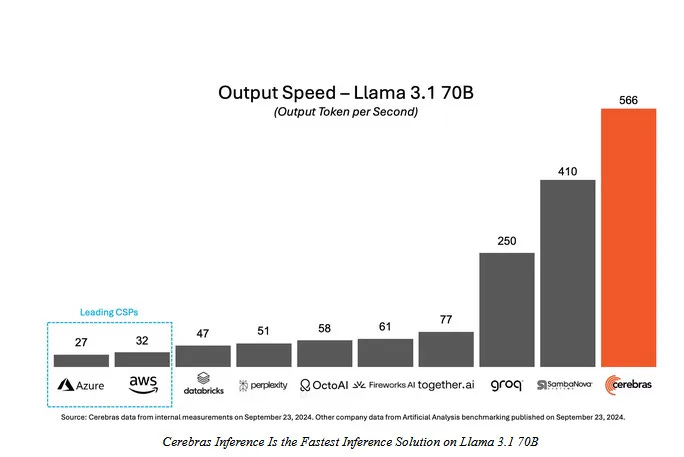

In 2019, Cerebras launched the Wafer-Scale Engine (WSE), a 1.2-trillion-transistor chip 57 times larger than the largest GPU. This bold innovation put Cerebras on the map. The company has now released three generations of the WSE, with the WSE-3 as its current flagship. The WSE-3 has 900,000 AI-optimized cores, 44 GB of on-chip memory, and 21 petabytes per second of memory bandwidth, figures unmatched by any other AI processor on the market.

Cerebras’ journey from an ambitious startup to a leading AI hardware company has been driven by this focus on WSI, positioning it as a significant player in the AI race alongside NVIDIA, Google, and other AI chip manufacturers.

Market Opportunity

The AI market is growing exponentially, driven by the rise of Generative AI (GenAI) and large language models (LLMs) like ChatGPT, Google’s Gemini, and Meta’s LLaMA. According to Bloomberg Intelligence, the AI compute market will expand from $131 billion in 2024 to $453 billion by 2027, a 51% compound annual growth rate (CAGR).

Cerebras is particularly well-positioned in two high-growth segments: AI Training and AI Inference.

The AI training market alone is expected to reach $192 billion by 2027, growing at a 39% CAGR, driven by the need for more computational power to train ever larger and more complex models like GPT-4 and its successors. Training such models demands massive computational resources, often thousands of interconnected GPUs; Cerebras’ wafer-scale architecture is designed to deliver faster, more efficient training without that sprawl.

AI inference, which refers to running trained models in real time (answering queries or making predictions in applications like chatbots, virtual assistants, autonomous vehicles, and healthcare diagnostics), is forecast to grow even faster, from $43 billion in 2024 to $186 billion in 2027, a 63% CAGR. Cerebras’ system is well suited to inference because it can serve real-time requests at high speed and low latency.
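As a quick sanity check on these forecasts, the compound annual growth rate can be recomputed from the start and end values: CAGR = (end / start)^(1 / years) - 1. The short Python sketch below uses a `cagr` helper of our own (not from any cited source) and treats 2024 to 2027 as three compounding years. It returns roughly 51% for the overall AI compute market and 63% for inference, in line with the quoted rates. The training figure can't be checked this way, because only its 2027 value is given.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Bloomberg Intelligence forecasts quoted above, in billions of US dollars (2024 -> 2027).
forecasts = {
    "AI compute (total)": (131, 453),
    "AI inference": (43, 186),
}

for segment, (size_2024, size_2027) in forecasts.items():
    rate = cagr(size_2024, size_2027, years=3)
    print(f"{segment}: ${size_2024}B -> ${size_2027}B = {rate:.1%} CAGR")
```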

Cerebras targets large enterprises, national research labs, and government agencies looking to accelerate AI development. G42, a sovereign AI initiative based in the UAE, is one of its key clients, underscoring its appeal to governments aiming to harness AI’s power.

Beyond its core customers, Cerebras is expanding into industries such as life sciences, materials science, and finance, where demand for AI compute is set to grow rapidly. As AI continues to penetrate new industries, Cerebras' total addressable market (TAM) is likely to grow even further.

Product

The Cerebras Wafer-Scale Engine (WSE-3) is the core of the company's product line. It is the largest and most powerful AI chip ever built, designed specifically for AI training and inference. Its wafer-scale design avoids the inefficiencies of connecting multiple GPUs, a challenge that has plagued AI researchers for years. The WSE-3’s 21 petabytes per second of memory bandwidth and 900,000 cores let it process vast amounts of data without splitting computations across many chips. This design has allowed some customers to achieve up to 10x faster AI training than on NVIDIA’s H100 GPUs.

The WSE-3 also consumes significantly less power than a traditional GPU cluster of comparable performance, leading to both cost savings and sustainability benefits.

In addition to its hardware, Cerebras offers the CS-3 System, a fully integrated AI system that includes the WSE-3 chip, power and cooling mechanisms, and proprietary software (CSoft). The Cerebras AI Supercomputer, which connects up to 2,048 CS-3 systems, can handle even the largest AI models. Its scalable architecture and flexibility allow customers to either train models on-premise or deploy them through Cerebras Cloud.

Business Model

Cerebras operates a hybrid business model. Customers can purchase its hardware for on-premise deployment or use its cloud-based AI compute on a consumption model. G42’s Condor Galaxy Cloud, for example, provides AI compute services powered by Cerebras technology to clients worldwide.

Cerebras focuses on enterprise AI. On-premise deployments suit customers who need complete control over data security, while pay-as-you-go cloud access suits those who prefer flexibility. The company’s cloud customers typically include research institutions, financial firms, and government agencies.

Moreover, Cerebras offers professional AI model training services, where it works with customers to optimize AI workflows and speed up AI development. These services, alongside its hardware and software, create an end-to-end AI solution that simplifies AI deployment and development.

Revenue Streams:

Hardware Sales: Enterprises that require data privacy and control over their AI compute infrastructure can purchase Cerebras systems to be deployed in their own data centers. These customers include pharmaceutical companies, defense contractors, and sovereign AI programs.

Cloud-based Solutions: Cerebras also offers a cloud-based AI solution through partnerships, such as the Condor Galaxy Cloud powered by G42. Customers can scale AI workloads flexibly and benefit from WSE-3's performance without managing physical infrastructure.

Services and Support: In addition to hardware, Cerebras generates revenue from providing AI consulting services and support contracts, assisting companies with AI model training and system deployment.

In 2023, Cerebras generated $78.7 million in revenue, up from $24.6 million in 2022, showing impressive growth as its products gained traction in the market. For the first half of 2024, revenue surged to $136.4 million, indicating rapidly growing demand for its systems.

Management Team

Cerebras boasts a world-class leadership team, with deep expertise in semiconductors, AI, and business strategy. Here’s a closer look at the leadership:

Andrew Feldman (CEO and Co-founder): Feldman is a veteran of the semiconductor industry, having previously founded SeaMicro, which AMD acquired for $334 million. He leads Cerebras' long-term vision and strategy, focusing on innovation and market expansion.

Sean Lie (Co-founder, Chief Hardware Architect): Sean leads the hardware design team and is the mastermind behind Cerebras’ revolutionary wafer-scale chip architecture. He brings decades of experience in integrated circuit design and semiconductor architecture.

Michael James (Co-founder, Senior VP of Engineering): Michael is responsible for the engineering efforts at Cerebras, overseeing product development from concept to market deployment. He ensures Cerebras remains on the cutting edge of AI hardware innovation.

Shirley X. Li (General Counsel): Shirley manages all legal and compliance issues at Cerebras, leveraging her extensive background in corporate law. She ensures Cerebras navigates complex regulatory challenges as it expands globally.

Gary Lauterbach (CTO): Gary brings decades of experience in system architecture and hardware development. His expertise in scaling complex architectures has been critical in advancing Cerebras’ wafer-scale designs.

Investor and Ownership Structure

Since its founding in 2016, Cerebras Systems has raised over $809 million across multiple funding rounds, attracting top-tier venture capital firms and sovereign AI initiatives. Here’s a breakdown of the key funding rounds:

May 2016: Series A

Amount Raised: $26.97 million

Issue Price: $0.85 per share

Post-Money Valuation: $67.43 million

Key Investors: Benchmark Capital, Foundation Capital, Open Field Capital

This initial funding gave Cerebras the financial backing to develop its Wafer-Scale Engine (WSE) and assemble a core team.

December 2016: Series B

Amount Raised: $25 million

Issue Price: $2.75 per share

Post-Money Valuation: $249.79 million

Key Investors: Benchmark Capital, Eclipse Ventures

Series B funding enabled Cerebras to accelerate WSE-1 development and expand its engineering team.

August 2017: Series C

Amount Raised: $65 million

Issue Price: $8.95 per share

Post-Money Valuation: $860.24 million

Key Investors: Benchmark Capital, Eclipse Ventures, Sequoia Capital

This round supported scaling production and delivering WSE chips to early customers.

November 2018: Series D

Amount Raised: $79.82 million

Issue Price: $16.15 per share

Post-Money Valuation: $1.7 billion

Key Investors: Altimeter Capital, VY Capital, Coatue Management

Series D allowed Cerebras to increase production and prepare for larger deployments in key sectors like research and government.

November 2019: Series E

Amount Raised: $273.35 million

Issue Price: $18.32 per share

Post-Money Valuation: $2.42 billion

Key Investors: Undisclosed

This significant round fueled the launch of the second-generation WSE-2 chip, pushing Cerebras into large-scale commercialization.

November 2021: Series F

Amount Raised: $254.38 million

Issue Price: $27.74 per share

Post-Money Valuation: $4.16 billion

Key Investors: Alpha Wave Ventures, Abu Dhabi Growth Fund, G42

This round marked a strategic inflection point, attracting key investors like G42 and setting Cerebras up for further growth.

September 2024: Series F-1

Amount Raised: $85 million

Issue Price: $14.66 per share

Post-Money Valuation: $2.84 billion

Key Investors: G42

Ahead of its anticipated IPO, Cerebras raised another $85 million from G42 to fuel its growth strategy.
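Summing the rounds listed above reproduces the headline total, and comparing issue prices shows how the per-share price moved from round to round. The minimal Python sketch below uses only the figures in this section (amounts in millions of dollars, prices in dollars per share). The total comes to about $809.5 million, and the Series F-1 price of $14.66 is roughly 47% below the Series F price of $27.74.

```python
# Funding rounds as listed above: (round, amount raised in $M, issue price per share in $).
rounds = [
    ("Series A", 26.97, 0.85),
    ("Series B", 25.00, 2.75),
    ("Series C", 65.00, 8.95),
    ("Series D", 79.82, 16.15),
    ("Series E", 273.35, 18.32),
    ("Series F", 254.38, 27.74),
    ("Series F-1", 85.00, 14.66),
]

total_raised = sum(amount for _, amount, _ in rounds)
print(f"Total raised: ${total_raised:.2f}M")

# Percentage change in issue price from each round to the next.
for (prev_name, _, prev_price), (name, _, price) in zip(rounds, rounds[1:]):
    change = price / prev_price - 1
    print(f"{prev_name} -> {name}: {change:+.0%}")
```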

Cerebras has drawn investments from major players like Benchmark Capital, Altimeter Capital, and Coatue Management. Among its key stakeholders, G42 is particularly prominent, not only as a top customer but also as a major shareholder, holding 22.9 million Class N shares and an option to purchase more.

As Cerebras prepares for its public offering, it stands poised for significant revenue growth, largely driven by its partnership with G42 and sustained investor confidence.

G42 relationship

G42, an Abu Dhabi-based AI and cloud computing holding company backed by Mubadala, plays a pivotal role in Cerebras’ business. Microsoft invested $1.5 billion in G42 in 2024, and G42’s relationship with Cerebras is multifaceted, spanning customer, investor, and partner roles.

G42 is Cerebras’ largest customer, contributing 83% of the company’s revenue in 2023 and 87% in the first half of 2024, with spending of $65M in 2023 and $118M in H1 of 2024. G42 has committed to purchasing $1.43 billion worth of Cerebras hardware and services by February 2025. While the timing for recognizing some of that revenue is still unclear, this deal signals significant future growth for Cerebras. Notably, Mohamed bin Zayed University of Artificial Intelligence has already agreed to a $350M portion of this commitment.
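The concentration figures line up with the dollar amounts: dividing G42's spending by total revenue for each period gives the quoted shares. A quick Python check, assuming the $65M and $118M figures are the revenue Cerebras recognized from G42 in those periods:

```python
# Figures quoted in this newsletter, in millions of US dollars.
total_revenue = {"2023": 78.7, "H1 2024": 136.4}
g42_revenue = {"2023": 65.0, "H1 2024": 118.0}

# Share of total revenue attributable to G42 in each period.
g42_share = {period: g42_revenue[period] / total for period, total in total_revenue.items()}

for period, share in g42_share.items():
    print(f"{period}: G42 accounted for {share:.0%} of revenue")
```

Rounded to whole percentages, this gives 83% for 2023 and 87% for H1 2024.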

G42 acquired a 1% stake in Cerebras with a $40M investment during the Series F round in 2021, and it is set to invest an additional $335M in shares by mid-2025. G42 also has the option to buy more shares at a 17.5% discount, tied to its customer order volume.

G42 also partners with Cerebras by offering Cerebras’ AI and cloud services on its Condor Galaxy Cloud platform, expanding both companies’ cloud computing capabilities. Cerebras provides similar services on its own cloud.

In short, G42 is the driving force behind Cerebras' current success. With substantial purchase commitments and strong ties, Cerebras is poised for significant revenue growth in the coming years, largely thanks to G42’s continued support and collaboration.

Competition

Cerebras operates in a fiercely competitive market, with NVIDIA being its most formidable rival. NVIDIA’s H100 GPUs dominate the AI hardware space, and its CUDA software ecosystem gives it a significant advantage. However, Cerebras’ Wafer-Scale Engine offers a compelling alternative, particularly for customers dealing with large-scale AI training.

Other competitors include Graphcore, which focuses on developing innovative AI processors for specific AI tasks, and Google’s Tensor Processing Unit (TPU), which is widely used in Google’s cloud infrastructure. Despite the competition, Cerebras differentiates itself by simplifying AI compute with its massive WSE chips, avoiding the need for complex multi-GPU architectures.

Financials

Cerebras Systems has demonstrated rapid growth, primarily driven by its partnerships with sovereign AI initiatives and enterprise clients. The company’s financial performance shows both the opportunities and challenges it faces as a deep-tech startup.

Growth Overview

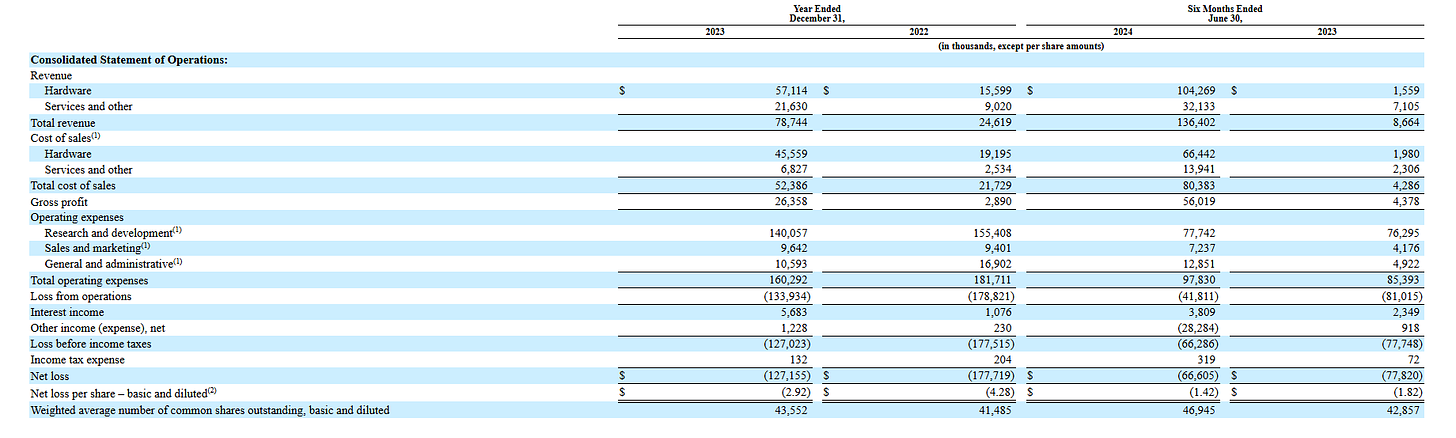

Cerebras has seen substantial revenue increases over the past few years. In 2023, it reported $78.7 million in revenue, up from $24.6 million in 2022, representing an impressive 220% year-over-year increase. The company's partnerships, particularly with G42, were instrumental in this growth, with G42 contributing 83% of total revenue. In the first half of 2024, Cerebras further accelerated, posting $136.4 million in revenue, already surpassing its 2023 full-year revenue.

Revenue Breakdown

2023: $78.7 million (220% YoY growth)

Hardware revenue: $57.1 million

Services and other revenue: $21.6 million

First Half of 2024 (H1 2024): $136.4 million

Hardware revenue: $104.3 million

Services and other revenue: $32.1 million
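The segment figures add up to the reported totals, and they also show that hardware accounts for roughly three-quarters of revenue in both periods. A short Python sketch that recomputes the totals, the 220% year-over-year growth, and the hardware share from the numbers in this section:

```python
# Revenue by segment, in millions of US dollars, from the breakdown above.
revenue_2022 = 24.6
segments = {
    "2023": {"hardware": 57.1, "services": 21.6},
    "H1 2024": {"hardware": 104.3, "services": 32.1},
}

# Total revenue per period is the sum of its segments.
totals = {period: sum(parts.values()) for period, parts in segments.items()}
yoy_growth = totals["2023"] / revenue_2022 - 1

print(f"2023 revenue: ${totals['2023']:.1f}M ({yoy_growth:.0%} YoY)")
for period, parts in segments.items():
    hardware_share = parts["hardware"] / totals[period]
    print(f"{period}: total ${totals[period]:.1f}M, hardware {hardware_share:.0%} of revenue")
```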

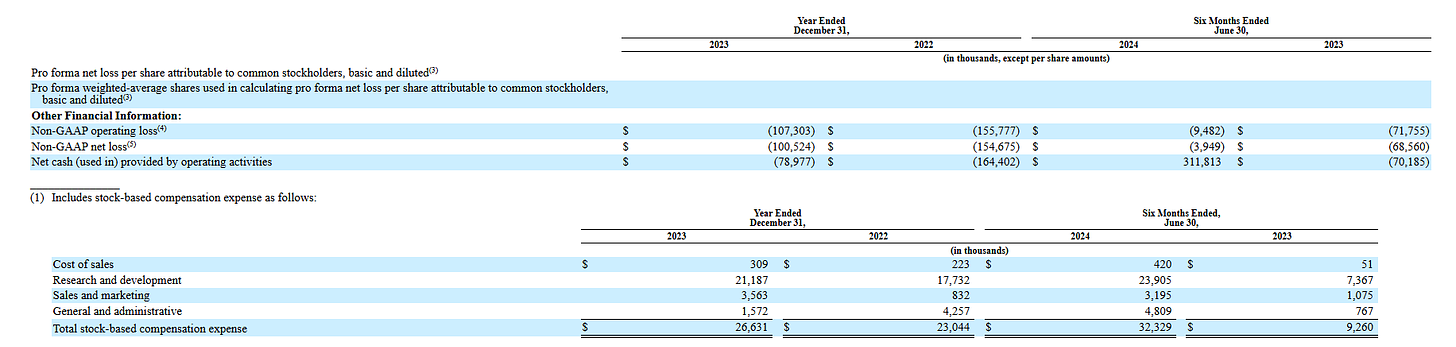

Losses and Investment

Despite strong revenue growth, Cerebras continues to operate at a loss, mainly due to its high levels of R&D and operational expenses. In 2023, the company reported a net loss of $127.2 million, down from $177.7 million in 2022, a 28% decrease. For the first half of 2024, it recorded a $66.6 million net loss, 14% smaller than in the first half of 2023.
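The 28% figure follows directly from the annual losses. The sketch below also expresses each period's net loss as a multiple of revenue for the same period, a simple ratio we've added to illustrate that losses are shrinking relative to sales. The 14% H1 comparison can't be reproduced here, because the H1 2023 loss isn't quoted in this piece.

```python
# Net losses and revenue, in millions of US dollars, as reported in this newsletter.
net_loss = {"2022": 177.7, "2023": 127.2, "H1 2024": 66.6}
revenue = {"2022": 24.6, "2023": 78.7, "H1 2024": 136.4}

# Year-over-year narrowing of the annual net loss.
loss_reduction = 1 - net_loss["2023"] / net_loss["2022"]
print(f"2023 net loss narrowed by {loss_reduction:.0%}")

# Net loss as a multiple of revenue in the same period.
for period in net_loss:
    print(f"{period}: net loss = {net_loss[period] / revenue[period]:.2f}x revenue")
```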

R&D Investment

Cerebras' success depends heavily on continuous innovation, which is reflected in its heavy investment in research and development:

2023 R&D expenses: $140.1 million

First Half of 2024 R&D expenses: $77.7 million

These investments focus on advancing the company’s Wafer-Scale Engine (WSE) technology and expanding its AI systems, a critical component of its growth strategy.
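One way to read these numbers is R&D intensity: R&D spend divided by revenue for the same period (a metric we've added for illustration; it isn't one of the reported figures). In 2023 Cerebras spent about 1.8 times its revenue on R&D; in H1 2024 that fell to roughly 57% as revenue grew faster than R&D. A minimal Python sketch:

```python
# R&D spend and revenue, in millions of US dollars, from this newsletter.
rnd_expense = {"2023": 140.1, "H1 2024": 77.7}
revenue = {"2023": 78.7, "H1 2024": 136.4}

# R&D intensity: R&D spend as a fraction of revenue in the same period.
rnd_intensity = {period: rnd_expense[period] / revenue[period] for period in rnd_expense}

for period, ratio in rnd_intensity.items():
    print(f"{period}: R&D = {ratio:.0%} of revenue")
```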

The Road Ahead: IPO and Expansion

Cerebras is positioning itself for an initial public offering (IPO) that will likely provide fresh capital to fuel its ambitious goals. The IPO will be critical for:

Further development of its AI compute platform

Expanding cloud offerings

Increasing market reach through strategic partnerships

While Cerebras remains in the red, its losses are shrinking as revenues accelerate, illustrating the potential for future profitability. As it continues to forge ahead in the AI hardware space, the company’s deep-tech approach is setting the stage for long-term growth and market disruption.

Closing thoughts

Cerebras' entry into the public market comes at a critical juncture, with AI hardware demand surging and investor appetite for high-growth companies in this space at its peak. The decision to IPO now reflects a strategic move to capitalize on its technological advancements, even as the company faces significant hurdles. While Cerebras has positioned itself as an innovator with hardware breakthroughs like the wafer-scale engine and support for unstructured sparsity, challenges such as compiler limitations, the economics of AI inference, and reliance on a single major customer raise questions about its long-term trajectory.

The timing of the IPO creates an opportunity for Cerebras to capture the attention of investors eager for exposure to AI infrastructure plays, even as doubts linger about its ability to scale and diversify its revenue streams. Just as companies like NVIDIA have benefited from investor enthusiasm around AI, Cerebras aims to leverage its differentiated hardware narrative. Yet, much like other high-growth tech IPOs, its future success will depend on overcoming technical limitations and navigating a complex competitive landscape, with potential for either massive growth or steep decline depending on execution.

The next few years will be critical for Cerebras to prove its scalability and value beyond its current customer base, making it a fascinating company to watch as AI demand accelerates globally.

If you enjoyed our analysis, we’d very much appreciate you sharing it with a friend.

Tweets of the week

Best way to look at failures: This too shall pass!

I have found it takes 3x longer for me!

Here are the ways we can work together. If any of them interest you, hit me up!

Get my free sales course: a 5-day educational email course on how to win high-ticket enterprise clients.

Subscribe to my YouTube channel: Your Learning Playground, with 350+ podcasts. Previous guests include Guy Kawasaki, Brad Feld, James Clear, and Shu Nyatta.

Sponsor this newsletter: Reach thousands of tech leaders

And that’s it from me. See you on Friday.
